(NewsNation) — According to one of the biggest names in artificial intelligence, I don’t work here.

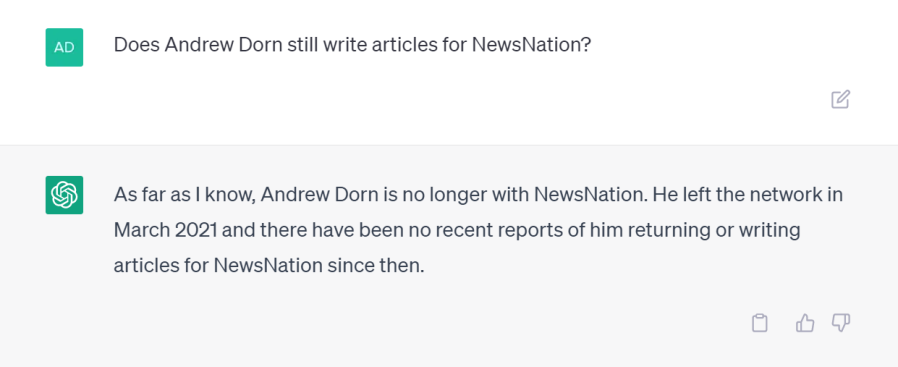

“As far as I know, Andrew Dorn is no longer with NewsNation,” ChatGPT told me.

“He left the network in March 2021 and there have been no recent reports of him returning or writing articles for NewsNation since then.”

That sounds definitive. But there’s one problem: it’s not true. I started at NewsNation in February 2022 and wrote five articles last week.

My experience isn’t uncommon. Artificial intelligence chatbots, like ChatGPT or Google’s Bard, regularly generate responses that are flat-out wrong. Some researchers call these “hallucinations” because the AI is perceiving something that isn’t real.

Experts say it’s a challenge everyone in the industry is trying to address. But it’s still not clear how much of the problem can actually be solved.

That’s because chatbots like ChatGPT “are not actually trained to present truthful information, (they’re) trained to present text that is of human quality intelligence and reasoning capability,” said Chris Meserole, the director of the Brookings Artificial Intelligence and Emerging Technology Initiative.

“It’s a slight distinction but a really important one,” Meserole said.

ChatGPT and Google Bard are built on large language models, or LLMs — a type of AI that learns skills by analyzing massive amounts of text.

LLMs use tons of data to spot patterns in phrases in order to predict what words come next. Those predictions are how AI applications generate sentences that sound like a human wrote them.

Sometimes, that can result in realistic sentences with false information.
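To see how word prediction alone can produce a confident falsehood, consider a toy sketch in Python. It is not how ChatGPT or Bard actually work: real LLMs are large neural networks trained on vast amounts of text, while this example only counts which word most often follows another. The training sentences and the function names (`train_bigram_model`, `predict_next`, `generate`) are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    follow_counts = defaultdict(Counter)
    words = text.lower().split()
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model, start, max_words=8):
    """Build a phrase by repeatedly appending the most likely next word."""
    words = [start.lower()]
    for _ in range(max_words - 1):
        next_word = predict_next(model, words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)


# Hypothetical training text: the "left in march" pattern appears twice,
# the "joined in february" pattern only once.
corpus = (
    "a reporter left the network in march . "
    "a reporter left the network in march . "
    "a reporter joined the network in february ."
)

model = train_bigram_model(corpus)
print(generate(model, "reporter", max_words=6))
# prints: reporter left the network in march
```

The sketch picks "left" and "march" simply because those words appeared more often in its training text, not because it checked whether the statement is true. That is the gap between fluent and factual, in miniature.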

Those mistakes won’t matter much to someone who’s using an AI chatbot for fun. But they could make a big difference to corporations that are rushing to implement the new technology.

For instance, Meserole said, what if a bank uses an LLM to provide insight on the stock market? Any errors could have real consequences.

Now imagine what happens with an AI hallucination in a healthcare or legal setting. The outcome could be the difference between life and death.

As AI platforms continue to evolve, the hallucination issues are expected to improve. But no one knows if they can be eliminated entirely.

Shortly after ChatGPT’s release, OpenAI CEO Sam Altman told users not to rely on the chatbot “for anything important” and warned of its ability to “create a misleading impression of greatness.”

In March, a group of prominent computer scientists called for all AI labs to “immediately pause” the training of AI systems for at least six months in order to better understand the potential risks to “society and humanity.”

On Saturday, billionaire investor Warren Buffett compared the rise of AI to the creation of the atomic bomb, an important technology whose long-term consequences were hard to know early on.

Tech CEO Adam Wenchel said it’s not enough to accept hallucinations as unavoidable quirks. His AI monitoring platform, Arthur, just released a firewall to help detect and block harmful or inaccurate responses.

Wenchel said businesses need tools to actively intervene before errors are made.

“You need to actually take responsibility for (hallucination) and prevent prompts,” he said. “These decisions can have health and life altering types of outcomes.”